EHS runs on trust. The work only functions when people believe the system is built to protect them. Employees need to feel safe enough to surface hazards and near-misses without worrying they’ll be personally blamed.

EHS leaders know this intimately. They’ve spent years building that trust through countless conversations and incident responses, and by proving that speaking up actually leads to change. They know how hard trust is to earn and how fast it can disappear.

So when AI shows up promising to make EHS smarter, the natural skepticism from many EHS leaders makes sense. AI needs to earn its keep. Plus, EHS has a different risk profile than the rest of the business. A flawed algorithm in marketing, for example, will waste budget. But a flawed algorithm in safety can miss the signal that would have prevented harm or even saved a human life.

That’s the tension with AI in this industry. Done right, it can surface patterns humans miss and make safety work genuinely proactive. Done wrong, it’s a black box making decisions no one can explain.

Evotix partnered with What Works Institute to survey more than 50 EHS leaders and host a roundtable with senior executives across manufacturing, utilities, construction and mining. We wanted to hear what EHS practitioners actually want from AI and identify the conditions that have to be true before it earns a real place inside the program.

Here’s what we found out:

Most organizations are still working through what AI in EHS actually looks like in practice.

42% of EHS executives said they’re piloting AI in select EHS processes or sites, and 33% are still exploring use cases. Only 8% reported scaling AI across multiple EHS functions or regions. None of the respondents described AI as a core business capability inside EHS today.

The AI adoption curve indicates that leaders are treating AI like any other safety program transformation: cautiously, deliberately and with a high bar for proving value.

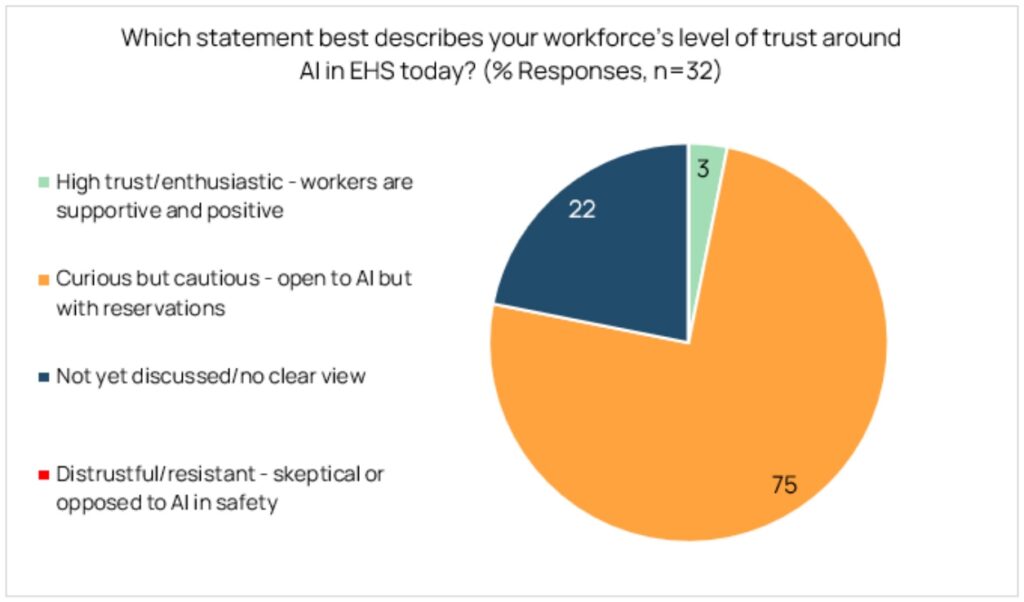

Workforce trust mirrors that reality. When asked how employees feel about AI in EHS today, 75% of respondents described workers as curious but cautious. 22% said it hasn’t been discussed yet or there’s no clear view. Only 3% reported high trust and enthusiasm. AI is being evaluated in real time, by real people, based on how it’s introduced and what it’s used for.

Broadly speaking, EHS leaders want AI to deliver specific, practical outcomes inside their programs, not just theory.

EHS teams lose hours every week to tasks that are necessary but time-consuming: reporting, documentation, pulling together dashboards, drafting updates, summarizing incidents and answering the same questions for multiple people across departments.

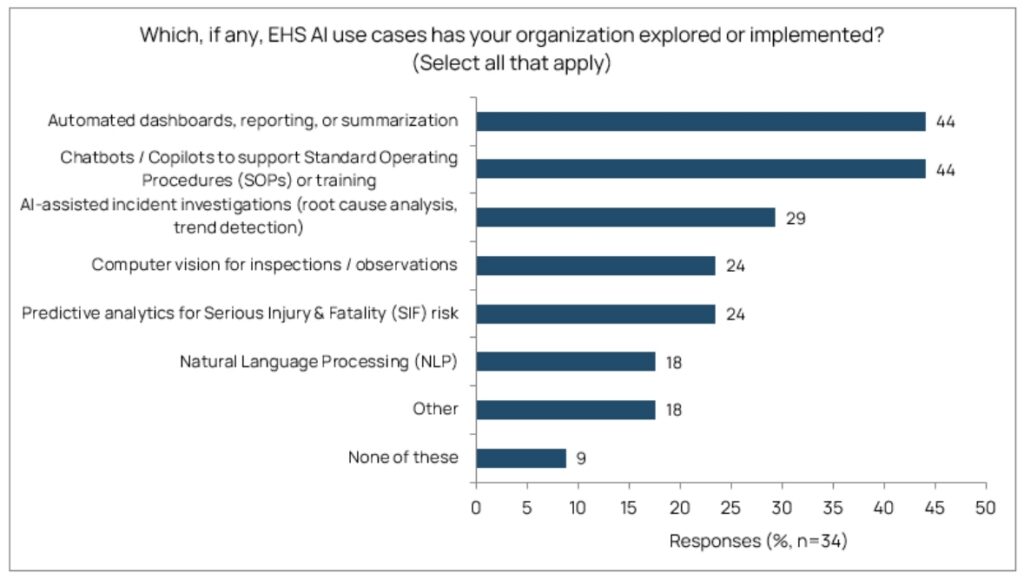

So it’s not surprising that the most common AI use cases are practical and workflow-adjacent. Among organizations using AI, 44% cited automated analytics, dashboards, reporting or summarization. Another 44% cited chatbot or copilot support tied to SOPs or training.

This is where AI can genuinely help, without needing to run the entire program. It reduces admin load and frees up EHS teams to spend more time doing strategic work that keeps people safe.

EHS teams need better visibility into weak signals and precursor patterns that get buried until something serious happens.

The research points to real appetite for predictive analytics and trend detection that can surface SIF (serious injury and fatality) precursors and emerging risks earlier. In practice, that means faster synthesis of leading indicators, clearer cross-site trend visibility and stronger early warning signals for serious risk.

Safety guidance is not as useful as it could be when it lives in PDFs, spreadsheets or personal desktops. Leaders want AI that makes safety knowledge usable at the point of work.

The report calls out “just-in-time guidance” as a major opportunity: PPE lookups, checklist walkthroughs, first-draft incident summaries and other practical support that helps frontline teams act faster without stopping production to hunt for answers.

Leaders also see a role for AI in training, program development and continuous improvement. Respondents identified potential for AI in developing tailored training content, identifying skill gaps and supporting overall program design.

Keeping humans in the loop was one of the clearest boundaries executives drew.

Leaders were especially direct about investigations, interviews and causal analysis. Those aren’t tasks you automate without changing the integrity of the outcome. The report explicitly states that these areas require human judgment and context that AI cannot replicate today, and that “human-in-the-loop” is non-negotiable for sensitive decisions.

Even when leaders see the value, they’re still thinking about the risks. It’s in the job, after all. The top concerns were accuracy, reliability and algorithmic bias (58%); privacy and potential surveillance (39%); and explainability and transparency (36%).

The report makes the point that many EHS teams still lack domain-specific AI policies, which creates the risk of fragmented, ad hoc adoption across sites.

The leaders who seem most prepared for AI are the ones putting governance in place early, with cross-functional involvement, clear boundaries and clear accountability.

Everything EHS leaders want from AI depends on one unglamorous thing: the quality of the data underneath it.

“Garbage in, garbage out” was the headline risk discussed at the roundtable. Leaders cited inconsistent taxonomies, unstructured narratives and variable data quality as the biggest barriers. Many said they need to clean up and standardize their data before implementing AI and seeing real value from it.

AI can accelerate reporting and surface patterns that would otherwise be lost in noise. But when there aren’t reliable inputs, it’s unlikely the outputs will be any better. EHS teams can’t afford to make decisions on shaky ground.

Here’s where EHS teams are focusing their efforts:
